Question:

@lertitia Hey, #AskDrEditor, what about readability formulas and indices? Apparently some of my writing is at a 19th grade level. What grade level should an academic writer shoot for?

— Dr LE Doyle 👩🔬🧬 (@RaincityBones) June 24, 2019

Dr. Editor’s response:

There’s no appropriate grade level. Ignore the formulas. They’re inadequate for your needs.

Am I painting with too broad a brush here? Perhaps: there are certain limited contexts in which I see value in readability formulas. But for the most part, they won’t help you assess the readability of any academic work that goes through peer review.

Let’s explore the range of readability indices and the ways that they may help you to communicate important concepts clearly.

Accessing and interpreting your readability score

Readability formulas are algorithms designed to assess how easy (or hard) your writing is to read. Some give you the grade level for which your writing is appropriate: newspapers, for example, are supposed to be written at a reading level between Grades 9 and 11, while Donald Trump’s speeches require only a Grade 5 education to understand (Kayam). Other readability formulas give you a score from 0 to 100, with higher numbers indicating easier reading.

Lots of free websites will automatically calculate the readability score of any text you paste into them; I mentioned two of my favourites, Hemingway App and Count Wordsworth, in my March 2019 column. If you use Microsoft Word or Google Docs for your writing, you can use their built-in tools to get your readability level; other commonly used platforms, such as Apple Pages, Scrivener, and LaTeX, don’t auto-compute readability statistics.

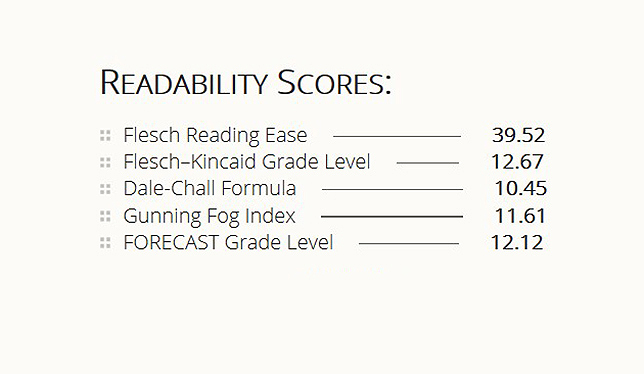

A site like Count Wordsworth will give you multiple different measurements of your text’s readability:

- Flesch Reading Ease is a score that normally falls between 0 and 100, with higher scores indicating easier text: a score of 90 to 100 corresponds roughly to a Grade 5 reading level, and a score of 0 to 30 to a university-graduate level.

- Flesch-Kincaid Grade Level is the school year or grade at which your reader must be comfortable in order to understand your text.

- Dale-Chall Formula is a score out of 10, with 4.9 or lower (easiest) being understood by the average Grade 4 reader, and 9.0+ (hardest) being understood by a college-level reader.

- Gunning Fog Index corresponds with grade level (i.e., a score of six is a Grade 6 reading level), with 12 being the maximum recommended score for texts to be read by the general public.

- FORCAST Grade Level, like Flesch-Kincaid and Gunning Fog, produces a score that corresponds to a grade level, but it was developed for non-narrative texts (e.g., forms and multiple-choice tests), so it ignores sentence length and counts only one-syllable words.
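To make the arithmetic behind these scores concrete, here is a minimal Python sketch of four of them, using the published coefficients for Flesch Reading Ease, Flesch-Kincaid, and Gunning Fog, and the FORCAST rule of 20 minus one-tenth of the number of one-syllable words per 150 words. The function names are hypothetical, and the syllable counter is a crude vowel-counting heuristic, not what Count Wordsworth or any other site uses, so its numbers won’t match theirs exactly. Gunning Fog is simplified here (every word of three or more syllables counts as "complex"), and Dale-Chall is left out because it depends on a list of roughly 3,000 familiar words.

```python
import re

def count_syllables(word: str) -> int:
    """Rough heuristic: count runs of vowels, discounting a silent final 'e'.

    Real tools use dictionaries or better rules; this is only an approximation.
    """
    w = re.sub(r"[^a-z]", "", word.lower())
    if not w:
        return 0
    count = len(re.findall(r"[aeiouy]+", w))
    # "make" -> 1 syllable, but keep "table" at 2
    if w.endswith("e") and not w.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)

def text_stats(text: str):
    """Return the words, the sentence count, and per-word syllable counts."""
    words = re.findall(r"[A-Za-z']+", text)
    # Naive split on terminal punctuation; abbreviations like "e.g." will confuse it.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    syllables = [count_syllables(w) for w in words]
    return words, max(len(sentences), 1), syllables

def readability(text: str) -> dict:
    """Compute four common readability scores (hypothetical helper)."""
    words, n_sent, syl = text_stats(text)
    n_words = max(len(words), 1)
    wps = n_words / n_sent                  # average words per sentence
    spw = sum(syl) / n_words                # average syllables per word
    complex_share = sum(1 for s in syl if s >= 3) / n_words
    mono_per_150 = sum(1 for s in syl if s == 1) * 150 / n_words
    return {
        "flesch_reading_ease": 206.835 - 1.015 * wps - 84.6 * spw,
        "flesch_kincaid_grade": 0.39 * wps + 11.8 * spw - 15.59,
        "gunning_fog": 0.4 * (wps + 100 * complex_share),
        # FORCAST ignores sentence length entirely
        "forcast_grade": 20 - mono_per_150 / 10,
    }

sample = "Readability formulas are algorithms designed to assess how easy your writing is to read."
for name, score in readability(sample).items():
    print(f"{name}: {score:.1f}")
```

Notice what the inputs are: counts of words, sentences, and syllables. Nothing in these formulas checks whether a word is familiar to your reader or whether a sentence makes sense.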

There are at least a half-dozen others. These formulas are often used to assess the clarity of health-care information, for instance, but they’ve also been applied to texts as varied as U.S. State of the Union addresses and website privacy policies.

Below are the readability scores for this very article, as calculated by Count Wordsworth:

By these metrics, I’m writing pretty poorly, and you’re having a hard time understanding what you’re reading. The recommended Flesch Reading Ease score for an online article is in the 60–70 range; I’m at 39.52, which means the description for this text’s writing style is “difficult.” One likely contributor to my poor readability score is the dominance of the word “readability” in this article: at 29 appearances in around 1,000 words, approximately three percent of my words are 11 letters long, and therefore my text must be hard to understand. Right?

Limitations of readability scores

Like any heuristic, readability formulas have limitations. They’re a blunt instrument built on flawed assumptions: the belief, for example, that “governmental” is harder to read than “bilk,” because “governmental” has four times as many syllables and three times as many letters as the shorter word. (“To cheat; to avoid paying,” by the way.)

Similarly, short sentences with short words will receive great readability scores even if they are nonsense. “Mike eats your cat gun now!” is both highly readable and utterly incomprehensible.
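Both blind spots are easy to reproduce. In the sketch below, which again relies on a crude vowel-counting syllable heuristic and a hypothetical `flesch_reading_ease` helper rather than any particular website’s implementation, “governmental” registers as four syllables against one for “bilk,” and the nonsense sentence scores above 100 on Flesch Reading Ease, off the top of the nominal scale.

```python
import re

def count_syllables(word: str) -> int:
    """Approximate syllables by counting runs of vowels (a crude heuristic)."""
    w = re.sub(r"[^a-z]", "", word.lower())
    if not w:
        return 0
    count = len(re.findall(r"[aeiouy]+", w))
    # Treat a final "e" as silent ("make"), except in "-le" endings ("table").
    if w.endswith("e") and not w.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)

def flesch_reading_ease(text: str) -> float:
    """Flesch Reading Ease: only word length and sentence length matter."""
    words = re.findall(r"[A-Za-z']+", text)
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    n_words, n_sent = max(len(words), 1), max(len(sentences), 1)
    syllables = sum(count_syllables(w) for w in words)
    return 206.835 - 1.015 * (n_words / n_sent) - 84.6 * (syllables / n_words)

# Word level: the formula sees letters and syllables, not familiarity.
for word in ("governmental", "bilk"):
    print(f"{word}: {len(word)} letters, {count_syllables(word)} syllable(s), "
          f"score {flesch_reading_ease(word):.1f}")

# Sentence level: short words in a short sentence score well, meaning or not.
print(f"{flesch_reading_ease('Mike eats your cat gun now!'):.1f}")
```

On its own, “governmental” scores far below zero while “bilk” scores above 100, even though “bilk” is the word that needed a definition in the paragraph above.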

A quick scan of the research literature on readability formulas confirms that these concerns are legitimate. In the context of patient education materials, Badarudeen and Sabharwal provide a helpful table breaking down the advantages and disadvantages of most of the common readability formulas, noting, for instance, that readability scores can be misleading when word length is one of their indicators: Flesch-Kincaid is “solely based on polysyllable words and long sentences,” and so “may underestimate reading difficulty of medical jargon that may contain short but unfamiliar words” (p. 2575). Assessing the usefulness of readability formulas in survey design, Lenzner concludes that the “formulas’ judgments are often misleading” (p. 692), and so recommends that, contrary to common practice, formulas “should not be used for testing and revising draft [survey] questions” (p. 693).

Using readability scales appropriately

Because of the flawed assumptions that underlie their construction, and because of the conclusions of the research assessing these tools, I advise against paying attention to readability formulas in any academic writing context in which you are addressing your peers: not for journal articles, monographs, reviews, or grant applications.

If you’re concerned about your readers’ ability to understand you in these contexts, don’t rely on a flawed assessment of readability. Instead, have a look at the tips on my blog, or invest in hiring an editor.

There are a few contexts in which it may be handy to run your words through a readability checker or other quick-and-dirty assessment of your writing (for instance, the red and green highlighting at Hemingway App), such as:

- quickly written but important student-facing documents: how-to instructions, descriptions of assignments, feedback on assignments;

- lay summaries in grant applications; and,

- lengthy emails.

But targeting your academic writing for a certain grade-level reader? The formulas won’t help you there. I don’t trust their results, and I wouldn’t advise spending time tweaking your writing in an attempt to change a readability score.
